AI in the Arena: Safety or Surveillance?

Introduction: The Dual-Edged Sword of AI

AI is no longer a futuristic concept for stadiums. It is already embedded in how fans enter arenas, move through them, and are monitored inside them. Facial recognition at turnstiles, crowd analytics that track movement patterns, and AI-powered threat detection cameras that scan for unusual behavior have made artificial intelligence a visible part of the sports security landscape. Stadium operators see it as a way to improve efficiency and bolster security in environments where tens of thousands of people gather.

The NCS4 2025 Spectator Sports Safety and Security Survey reveals the complexity of how fans view this shift. On the one hand, 60% of fans believe AI makes them safer, associating it with quicker risk identification and better-controlled environments. On the other hand, 55% of those same fans express concern that AI invades their privacy. This split demonstrates the growing tension between safety and personal freedom in public spaces.

That tension forms the central theme of this analysis: while AI can enhance physical security, it simultaneously introduces new cyber and ethical risks. Biometric data can be stolen. Surveillance systems can be hacked. Deepfakes or manipulated AI feeds could trigger panic or confusion in crowded environments. As stadiums integrate AI, they must not only consider its promise but also confront the vulnerabilities and ethical dilemmas it presents.

Why Fans Believe in AI’s Safety Benefits

Many fans see AI’s rise in stadiums as a natural upgrade: a tool that can make crowds safer and more manageable. The promise of systems ranging from facial recognition turnstiles to AI-driven behavior analytics is that they can preempt threats, strengthen security, and reduce human error. Stadiums tout shorter entry lines, fewer ticketing bottlenecks, and earlier detection of suspicious activity as direct benefits of AI deployment.

The spread of facial authentication in U.S. sports venues shows that belief in AI’s safety value is already shaping real deployments, not just opinion. Multiple Major League Baseball teams are using or testing “biometric entry” systems that let fans who opt in upload a selfie and enter via facial matching instead of scanning a ticket. Some stadiums frame this not only as a security layer but also as a frictionless, modern fan experience.

Yet these systems often come with trade-offs. Even as fans accept biometric entry as a security measure, privacy concerns shadow the technology. Stadium operators state that facial images are tokenized and deleted immediately, but many fans remain uneasy about consent, data reuse, and the potential for abuse. The perceived safety that AI provides depends heavily on how transparently and responsibly it is deployed.

In short, fans believe AI can enhance safety because it offers speed, automation, and proactive threat detection. But whether that belief holds up depends on whether venues can deliver those benefits without compromising trust or exposing new risks.

The Privacy Tradeoff: What 55% of Fans Fear

The security benefits of AI in stadiums are only half the story. As technology expands into every corner of venue operations, from entry gates to concession stands, the privacy cost becomes harder to ignore. Already, 55% of surveyed fans say AI invades their privacy, and that hesitation has clear causes.

One of the leading concerns is how biometric data is collected, stored, and repurposed. Stadiums using facial recognition or facial authentication frequently convert images into digital tokens for verification. Critics ask what happens when those tokens are stored long term or linked to personal profiles. Stadium policies often promise that images will be deleted immediately, but the language around deletion, reuse, and secondary uses is sometimes vague.

Another tension lies in the “normalization” of surveillance. Once fans accept facial scanning as a routine part of game entry, broader deployments become less visible and less scrutinized. Privacy advocates warn this could lead to expansion beyond entrance control into tracking movement, enforcing bans, or sharing access with law enforcement.

Stadiums have also faced pushback from privacy advocates and fan groups. In New York, protesters gathered at Citi Field to oppose the Mets’ use of facial recognition technology, calling it invasive and unnecessary. In the UK, the Football Supporters’ Association formally voted to oppose biometric turnstile systems, citing demographic bias and a lack of meaningful fan consultation.

Legally, many venues are operating in murky territory. The U.S. has no comprehensive federal law governing biometric data, so the rules vary from state to state. Some states, such as Illinois with its Biometric Information Privacy Act (BIPA), enforce strong biometric privacy laws that require notice, consent, and limits on data retention, and those laws could directly affect sports venues.

In effect, fans aren’t just worried about whether their data is collected—they’re questioning who controls it, how long it lives, and what it might be used for in the future. The promise of faster entry and enhanced safety must be balanced against the potential for surveillance creep, bias, and abuse.

Cyber Twist: When AI Becomes the Target

AI-powered systems—especially biometric and surveillance tools—aren’t invincible. As stadiums adopt facial recognition, crowd analytics, and automated monitoring systems, attackers are actively probing for potential weak points. Below are key vulnerabilities and real-world incidents that show how AI systems can be compromised, manipulated, or weaponized.

Facial Recognition Backdoors & Adversarial Attacks

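Modern facial recognition models are sometimes vulnerable to backdoors: hidden behaviors planted during training that a specific trigger pattern later activates, causing the model to misclassify a face or miss it altogether. Closely related adversarial attacks use tiny, carefully crafted perturbations to the input to achieve a similar effect without tampering with training. Researchers demonstrated the backdoor risk in a study called FaceHack, where subtle changes to facial characteristics (including overlays applied via software filters) could evade or confuse security systems.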

Another example is the “invisible mask” attack, which uses infrared light, undetectable to the human eye, to fool recognition systems into misreading or ignoring faces entirely.

These techniques matter because a motivated attacker could craft disguises (e.g., glasses, makeup, or software filters) that let them slip through facial authentication or spoof identities inside stadium systems.
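To make the idea of “tiny perturbations” concrete, here is a toy sketch. It illustrates an adversarial perturbation, not a backdoor, and it assumes a hypothetical linear match scorer (a weighted sum of five made-up feature values plus a bias, accepted when the score is positive) rather than any real face recognition model. It applies a fast-gradient-sign-style step: because the gradient of a linear score with respect to its inputs is simply the weight vector, nudging every feature by a small amount against the sign of its weight lowers the score as quickly as possible for that per-feature budget.

```python
# Toy illustration only: a hypothetical linear "match" scorer, not a real face model.
# All weights, features, and the epsilon budget below are made-up values.


def score(weights, bias, features):
    """Linear match score; a positive value means 'recognized as the enrolled person'."""
    return sum(w * x for w, x in zip(weights, features)) + bias


def sign(v):
    """Return 1, -1, or 0 depending on the sign of v."""
    return (v > 0) - (v < 0)


def evasion_perturbation(weights, features, epsilon):
    """FGSM-style step for a linear model.

    The gradient of the score with respect to the features is just `weights`,
    so moving each feature by `epsilon` against the sign of its weight lowers
    the score by epsilon * sum(|w|), the largest drop possible when no single
    feature may change by more than epsilon.
    """
    return [x - epsilon * sign(w) for w, x in zip(weights, features)]


weights = [0.9, -0.4, 0.7, 0.3, -0.8]
bias = -0.1
probe = [0.5, 0.2, 0.4, 0.1, 0.3]  # hypothetical features extracted from a face

adversarial = evasion_perturbation(weights, probe, epsilon=0.15)

print(round(score(weights, bias, probe), 3))        # 0.34   -> accepted
print(round(score(weights, bias, adversarial), 3))  # -0.125 -> rejected
```

No feature moves by more than 0.15, yet the decision flips from accept to reject. Attacks on deep models are harder to craft, but the same principle explains why small physical or digital changes can shift a model's output.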

Biometric Data Breaches & Privacy Fallout

Even secure systems can be breached. The Australian company Outabox, which provided facial scanning kiosks for clubs and venues, suffered a breach exposing over a million biometric records.

This breach highlights a fundamental issue: once biometric data is stolen, it’s not like a password that can be changed. Your face, your iris, your gait—they’re constant. A breach of biometric systems is a long-term vulnerability, especially when that data is stored in centralized servers with multiple points of access.

A related case: Dutch authorities fined Clearview AI for building an extensive facial recognition database from images scraped from the web without consent. The fine highlights the regulatory risk of surveillance that depends on aggregating public and private data.

Deepfake and Synthetic Video Manipulation

Artificial intelligence can also be used to generate convincing fake video or audio in real time. In high-stakes environments like stadiums, a manipulated jumbotron feed or public announcement could trigger panic, false alarms, or orchestrated disinformation.

In broader contexts, deepfake-based fraud has already caused major financial losses. In one case, a company was allegedly tricked into transferring millions by what appeared to be real-time instructions from its own leadership.

In stadium settings, such attacks could distort crowd behavior, misidentify threats, or amplify fear by broadcasting false content or alerts.

Supply Chain & Vendor Exploits

Many stadiums depend on third-party vendors for security systems, cameras, AI modules, and cloud services. Compromise at the vendor level—such as outsourced camera firmware, insecure APIs, or weakly secured models—can cascade into full-system vulnerabilities.

For example, Verkada, a startup selling AI-enabled security cameras, was breached in 2021, giving attackers access to live feeds from roughly 150,000 cameras across many organizations. While not stadium-specific, it is a cautionary tale about trusting vendor ecosystems without scrutiny.

Live Surveillance Used Without Oversight

Outside the sports arena, real-world incidents have revealed how AI surveillance can creep beyond its intended use. In New Orleans, a network of more than 200 facial recognition cameras reportedly monitored public streets and sent real-time alerts to police, potentially violating local limits on surveillance.

That case demonstrates how systems deployed for security or access control can be repurposed, scaled, or abused—venturing far beyond initial design.

Summary & Risks

AI systems are not just passive tools; they are active targets.

Attackers can exploit backdoors, adversarial inputs, or weak vendor links to bypass detection.

Breached biometric data presents long-term vulnerabilities.

Deepfakes and synthetic media can turn “watchful eyes” into misinformation vectors.

Systems deployed for safety can evolve into surveillance infrastructure if unchecked.

Over the remainder of this post, we’ll discuss how stadiums and operators can defend AI systems, build in resilience, and protect both safety and privacy—because allowing AI to self-police without guardrails is a risk that no fan should take.

Case Studies & Lessons Learned

To understand the risks (and opportunities) of AI in stadiums, it is helpful to ground theory in real-world deployments and controversies. Below are illustrative case studies showing how venues, governments, and critics have navigated (and sometimes stumbled into) the challenges of AI surveillance and facial recognition.

Citi Field & Fan Protests Over Facial Recognition

At New York’s Citi Field, the Mets faced public backlash when protesters gathered to raise awareness about facial recognition systems being used for fan entry. Critics argued that the technology normalizes mass surveillance under the guise of safety.

This case highlights how fan trust can fracture quickly if biometric systems are deployed without transparency.

NFL and Facial Recognition for Workers, Not Fans

In August 2024, Reuters fact-checked claims about new facial recognition programs in the NFL, clarifying that they applied only to stadium workers, staff, and media personnel, not to general attendees.

This limits fans’ exposure, and it illustrates a middle ground: partial adoption can help manage risk, but the boundary must be clearly communicated.

Brazilian Stadiums Use Facial Recognition to Arrest Wanted Individuals

Some stadiums in Brazil have successfully implemented facial recognition for security purposes, not just for ticketing. During matches, cameras identified individuals with outstanding warrants, leading to their arrest.

This case demonstrates a more aggressive approach, combining surveillance with law enforcement, which raises questions about consent and oversight.

Paris Olympics: Algorithmic Video Surveillance

During the 2024 Paris Olympics, authorities utilized an AI platform called Cityvision, which connected over 485 cameras citywide to detect behaviors such as crowd surges, abandoned objects, and sudden movements. The deployments drew criticism for their lack of transparency and legal authority—some surveillance decrees were published only after the cameras were already live.

This demonstrates the tension between data-driven security and democratic oversight—the “can we?” frequently outpaces the “should we?”

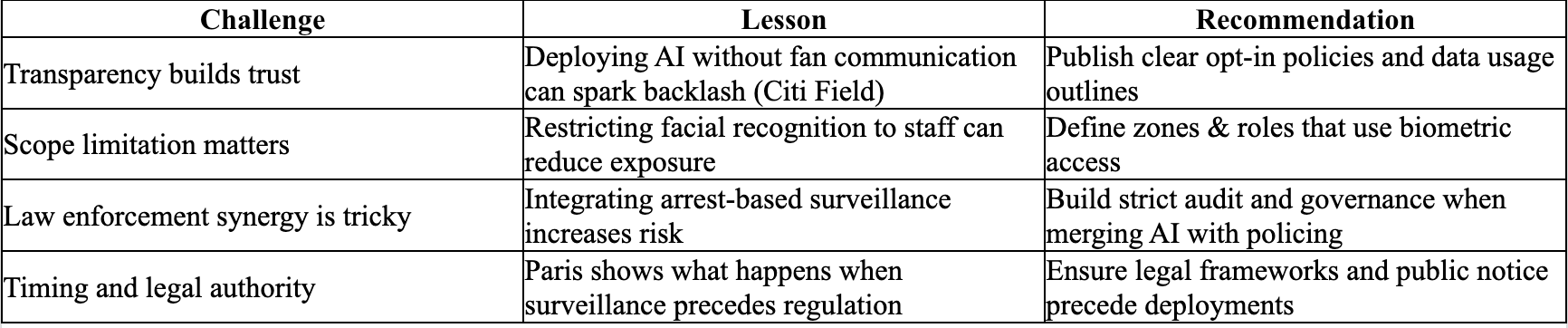

Lessons & Takeaways for Stadium AI Deployment

Balancing Safety and Surveillance

Artificial intelligence has undeniable value in enhancing stadium safety. It can reduce wait times, spot suspicious behavior faster than human eyes, and automate threat detection across massive crowds. But without guardrails, the very technology designed to protect fans risks eroding their trust. The NCS4 survey shows this tension clearly: while most fans welcome AI for its security benefits, more than half fear the privacy trade-offs. Bridging that gap requires proactive safeguards.

From these cases, several lessons emerge:

1. Transparent Data Use Policies

Fans need to know exactly what’s being collected, how it’s stored, and when it’s deleted. Too often, venues emphasize the speed and convenience of AI entry systems but bury the details of data handling in legal jargon. Clear, plain-language explanations, paired with opt-in consent, can turn skepticism into trust. Transparency doesn’t just ease concerns; it also supports compliance with the growing body of state biometric privacy laws.
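A published deletion promise is easier to audit when it maps onto a rule that software actually enforces. The sketch below assumes a hypothetical 24-hour retention window and a per-record opt-in flag (neither comes from any specific venue's policy) and splits stored biometric records into those that may be kept and those that must be deleted.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical policy value: a venue might publish "face data is deleted within
# 24 hours of entry". The real window is whatever the venue discloses to fans.
RETENTION_WINDOW = timedelta(hours=24)


@dataclass
class BiometricRecord:
    fan_id: str            # hypothetical internal identifier
    captured_at: datetime  # timezone-aware capture time
    opted_in: bool         # explicit opt-in consent recorded at enrollment


def partition_by_policy(records, now, window=RETENTION_WINDOW):
    """Split records into (keep, delete) under the published policy.

    A record is kept only if the fan explicitly opted in AND it is still
    inside the retention window; everything else is scheduled for deletion.
    """
    keep, delete = [], []
    for record in records:
        within_window = now - record.captured_at < window
        (keep if record.opted_in and within_window else delete).append(record)
    return keep, delete


now = datetime(2025, 6, 1, 20, 0, tzinfo=timezone.utc)
records = [
    BiometricRecord("fan-a", now - timedelta(hours=2), opted_in=True),
    BiometricRecord("fan-b", now - timedelta(hours=30), opted_in=True),
    BiometricRecord("fan-c", now - timedelta(hours=1), opted_in=False),
]

keep, delete = partition_by_policy(records, now)
print([r.fan_id for r in keep])    # ['fan-a']
print([r.fan_id for r in delete])  # ['fan-b', 'fan-c']
```

Defaulting to deletion (anything not explicitly consented and in-window is removed) mirrors the plain-language promise fans are given, which makes the policy straightforward to explain and to check.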

2. Independent Oversight of AI Surveillance

Leaving AI entirely in the hands of vendors or stadium operators invites mission creep. Independent review boards, privacy commissions, or third-party auditors should regularly evaluate how these systems are being used, ensuring that their scope stays limited to safety and doesn’t drift into surveillance for profit, policing, or unauthorized tracking. Oversight isn’t just ethical—it’s a strategic tool for protecting brand reputation when public trust is on the line.

3. Secure Storage and Anonymization of Data

Biometric and behavioral data are high-value targets. A breach of facial recognition records or movement patterns could expose fans in ways far more damaging than a stolen password. Stadiums must invest in encrypted storage, decentralized systems where possible, and anonymization protocols that strip personally identifiable information once safety checks are complete. Data minimization—collecting only what is essential—should be the standard, not the exception.
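Data minimization can be enforced at the point where records are written. The sketch below assumes a hypothetical entry event with made-up field names and a hypothetical allowlist of fields a post-entry safety review needs; it drops everything else and replaces the ticket ID with a keyed HMAC-SHA256 token. Note that this is pseudonymization rather than full anonymization: anyone holding the key can recompute tokens, so the key has to be stored separately and protected.

```python
import hashlib
import hmac

# Hypothetical allowlist: the only fields a post-entry safety review is assumed to need.
ESSENTIAL_FIELDS = {"gate", "entry_time"}


def minimize_entry_event(event: dict, key: bytes) -> dict:
    """Keep only allowlisted fields and replace the ticket ID with a keyed token.

    HMAC-SHA256 with a secret key (held outside the event store) lets analysts
    link repeat entries by the same ticket without storing the raw ID. Names,
    face data, and any other non-allowlisted fields are never written.
    """
    minimized = {k: v for k, v in event.items() if k in ESSENTIAL_FIELDS}
    minimized["ticket_token"] = hmac.new(
        key, event["ticket_id"].encode("utf-8"), hashlib.sha256
    ).hexdigest()
    return minimized


raw_event = {
    "ticket_id": "TKT-001234",
    "name": "Jane Fan",
    "face_template": b"\x01\x02\x03",
    "gate": "North 3",
    "entry_time": "2025-06-01T18:42:00Z",
}

stored = minimize_entry_event(raw_event, key=b"example-secret-key")
print(sorted(stored))  # ['entry_time', 'gate', 'ticket_token']
```

Using an allowlist rather than a blocklist means a newly added field is excluded by default, which matches the principle of collecting only what is essential.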

4. Cybersecurity and Privacy by Design

Ultimately, AI surveillance in stadiums cannot be treated as a “bolt-on” feature. It must be integrated into a broader cybersecurity and privacy framework from the start. That means applying the same rigor that organizations already use for financial systems: penetration testing, vendor vetting, patch management, and continuous monitoring. Cyber resilience needs to be baked into every AI system deployed, not applied after the fact.
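Vendor vetting and patch management can include refusing to install camera or AI-module firmware that differs from the build that was reviewed. The sketch below assumes a hypothetical workflow in which the SHA-256 digest of each vetted firmware image is recorded at review time and checked before deployment. Production systems more commonly rely on vendor-signed firmware verified on the device; a pinned hash is a simpler stand-in for the same idea.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a firmware image."""
    return hashlib.sha256(data).hexdigest()


def firmware_matches_pin(image: bytes, pinned_digest: str) -> bool:
    """True only if the image hashes to the digest recorded during vendor vetting.

    hmac.compare_digest performs a constant-time comparison; both arguments
    here are ASCII hex strings.
    """
    return hmac.compare_digest(sha256_hex(image), pinned_digest.strip().lower())


# Hypothetical stand-ins for a vetted release and a tampered copy.
vetted_image = b"camera-firmware v4.2 (vetted build)"
pinned = sha256_hex(vetted_image)  # recorded once, at vetting time

tampered_image = vetted_image + b" + injected payload"

print(firmware_matches_pin(vetted_image, pinned))    # True
print(firmware_matches_pin(tampered_image, pinned))  # False
```

Any change to the image, however small, produces a different digest, so a compromised update from the supply chain fails the check instead of silently reaching every camera.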

Call to Action: Stadiums must treat AI not just as a tool, but as a system that demands cybersecurity and privacy by design. Without clear safeguards, AI risks trading physical security for digital vulnerability. With them, however, AI can become a trusted ally in keeping fans safe without sacrificing their rights.

Conclusion: The Future of Watching Fans

Artificial intelligence has the potential to make stadiums safer, smarter, and more efficient. From quicker entry points to real-time threat detection, the benefits are real, and fans are already experiencing them. But the same systems that promise safety can, if left unchecked, become vulnerabilities themselves. Hackable databases, adversarial AI attacks, and deepfake manipulation aren’t distant hypotheticals; they are real risks for any poorly secured system.

The challenge, then, isn’t whether to use AI—it’s how to deploy it responsibly. With transparency, oversight, and cybersecurity built in from the start, AI can be a trusted guardian of fan safety. Without those safeguards, it risks eroding trust, compromising privacy, and creating entirely new threat vectors.

When AI is watching the crowd, who’s watching AI?

Sources:

Stadium Tech Report – Stadiums expanding use of biometric technology, especially facial authentication

Athletic Business – Data and privacy concerns surround facial recognition access technology at sports venues

WIRED – Stadiums Are Embracing Face Recognition; Privacy advocates protest

ID Tech Wire – Football Supporters’ Association opposes facial recognition at stadiums, citing privacy and bias risks

NYSBA (New York State Bar Association) – Privacy vs. Security: The legal implications of using facial recognition at entertainment venues

ACLU – Face Recognition Threatens to Replace Tickets, ID at Sports Events and Beyond

arXiv – FaceHack: Adversarial Attacks on Facial Recognition Systems

arXiv – Infrared-based Invisible Mask Attacks Against Face Recognition Systems

WIRED – Outabox facial recognition breach exposes over a million biometric records

AP News – Clearview AI fined in Netherlands over facial recognition privacy violations

Incode – Top 5 Cases of AI Deepfake Fraud from 2024 Exposed

Wikipedia / Reuters – Verkada hack exposed live feeds from 150,000 cameras

Washington Post – Live facial recognition monitored New Orleans streets in real time, raising legal questions

Reuters Fact Check – NFL facial recognition system only for workers, not attendees

Le Monde – How algorithmic video surveillance was used during the Paris Olympics